InfiniBox Supports Unlimited Snapshots

Learn how INFINIDAT enables an unlimited number of snapshots without any impact on performance. Transcript below:

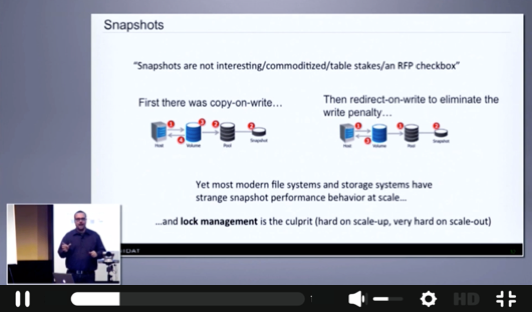

[Brian Carmody, CTO, INFINIDAT] The next thing that I'd like to share with you is some information about our snapshot implementation. And this is one of the lies that I would like to put out there. We hear this all the time, snapshots aren't interesting. There are table stakes. They're an RFP checkbox. Do you have them? Or you don't. So if you then talk to the technical salesperson, they will give you a little more information. They'll say there's good snapshots and bad snapshots. Bad snapshots are copy-on-write. Good snapshots are redirect-on-write.

What I am postulating is that they're both bad. Or maybe that's being too negative. There's room for improvement in both of these. And the reason you know that is true is every modern file system and storage system that I'm aware of and the US Patent Office is aware of has strange behavior that happens when you add large numbers of snapshots or a large velocity of creation of them. This is why one of the fundamental reasons why you see data sheets for storage products that go up to multi-petabytes, but you can only -- you know, you try to go above a fraction of that and then they tell you you need to buy another system.

What it all comes down to is lock management in the cache. In order to take a snapshot, you have to lock certain data structures in memory during the duration of the snap being taken. And if you don't, you can have an IO that's half in and half out of the snap. And its data corruption.

So one of the kind of dirty secrets in our industry is that storage developers all know and take for granted, but customer technologists, I find, don't really grok this. But modern storage systems, or all storage systems, spend a nontrivial amount of time being locked, where they're not servicing IO, where they're buffering IOs in their drivers, while management operations are occurring. And this is all for data consistency. It is a huge pain in the butt, this type of programming, under the best of circumstances. When you bring scale out technologies and distributed lock management, it is a nightmare.

So we developed a system that completely bypasses all of this. And let me-- we'll start here. OK. You remember that we can have in our VUA, we can have multiple addresses that point to the same piece of data. So for us, creating a snapshot is a pure metadata operation. It's the creation of pointers. It's inserting them into the trie. It is a zero time operation. There's no copying of data or anything like that.

This has profound implications, and not just for the performance of the snapshots, but also the way that you design your APIs. Everybody says they have a rest API, most of them are terrible. You execute a command, and then you have to keep calling back to see if it completed yet. It's gross. This allows us, when I say zero time, you want to create a snapshot even if it's a petabyte. It's the entire system or whatever the capacity is. You take it, it completes, its fixed, it's whatever it is, a fraction of a millisecond, and it's in.

Now, critically, we do this without any locking of our metadata whatsoever when we take a snapshot. So how do we take a snap without -- how do we guarantee integrity of snap groups without having a locking mechanism? In that 4K metadata page that's associated with every data section, we include a timestamp.

So we have timestamps for every write that has come into the system. Or more precisely, for every 64 kilobytes worth of written data. Because of this, a write is unambiguously in or out of a snap based on the timestamp of when the snap was created and the timestamp of all the pending IOs. It's either in or out, whether it occurred before or after. This allows us to have write integrity or data integrity across our snapshots without any locking of metadata whatsoever. The snapshot object itself also has a timestamp.

So what this means for customers is we support 100,000 snaps per system with no performance penalty whatsoever. By the way, we just made that number up. We asked customers what was the most number of snaps they needed. We took the biggest one and we doubled it. But if you're a customer and you need 200,000 snaps, call us. We'll test it. And if it's OK, which it will be, we will increase the limit on your system.

Number two, a volume with and without snapshots has indistinguishable performance. I can take a volume that's naked and is not mirrored and not with no local replication, no remote replication, and I can have one that has many, many replicas and copies. And they will have indistinguishable performance.

Finally, I got a question during the last briefing round at VMworld, how many snaps per second can your system? So I actually tested this over a VPN. I was able to issue 25 snaps per second pretty consistently to a snap group. Which by the way, try that on any existing system and it's not going to work. Not only that. Not only does the server accept it-- the storage system accept them and execute them, during that time, the latency will be razor flat. I challenge you, try this on any file system or any storage system that is commercially available today. Try to create a velocity of this many snaps, and you will see extremely -- if it's even able to keep up -- you will see extremely jagged latency curves, which is all about the locking and unlocking mechanisms. It's all about metadata.

Now, a classic example beyond business continuity where this starts to change customers' lives is, we tell them snap everything. Even if you don't have an SLA for an application for snapshots, snap it once an hour or once a day. And then if something goes wrong, software upgrade or whatever, you can always roll back. This changes customers' lives from a risk management perspective. When you have true zero penalty snaps, we don't charge for the feature. They're space efficient, 64 kilobyte granularity.

They're also what we build our replication engine on top of. The way our replication works is very simple. When you mirror a volume, we take a snapshot every n number of seconds. We ship that log. We apply it to the slave. And then once there's consensus that it applied, we click forward and we delete the previous snapshot. As a result, you can have a system with 100,000 whatever replicated volumes, or one big one, literally replicating the entire array. And we can guarantee a four second recovery point objective.